This post introduces VrJpeg, a .NET library to read files created by Google’s CardboardCamera app, and discusses the format of these files. The library is available as a NuGet package and on GitHub. The license is Apache v2. It is a small library built on top of the versatile MetadataExtractor.

All the images in this post were captured by me using a Samsung Galaxy S6.

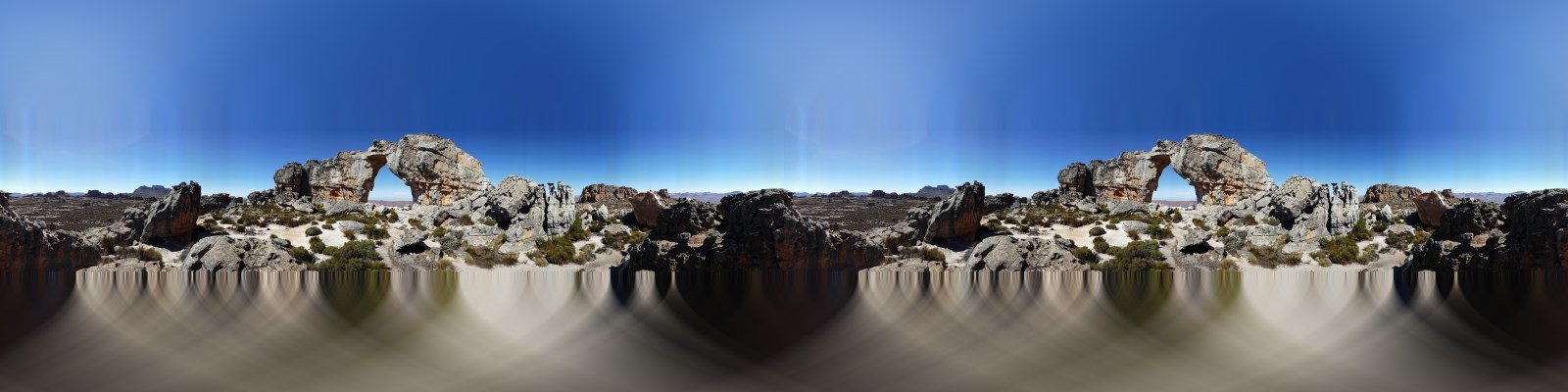

Left/right stereo pano of the Wolfberg Arch in the Cederberg, Western Cape, South Africa. Original file.

The Cardboard Camera app

The CardboardCamera is a mobile app published by Google to create stereoscopic panoramas. Unlike pretty much every other panorama capture app, it is capable of synthesizing a panoramic image for each eye so that the result can be viewed in a VR headset in full stereoscopic glory. A cardboard headset is not necessary for the capture; this is a pure Android app.

To capture a panorama you hold the phone at mid-arm’s length and rotate slowly until the loop is closed. The capture takes about a minute. A device with a gyroscope is required.

An interesting aspect: you can configure it to capture the ambient audio during the turn and have it embedded in the final file.

A rooftop in Downtown Los Angeles. Left eye.

The captured frame covers 360° × 66.01°.

Original file with embedded right eye and 37s of audio.

Oh, the results aren’t perfect. Depending on the scene and the way you hold the camera, you can get banding artifacts, exposure mismatches, binocular rivalry and other festivities. It works most of the time, but you need to be aware of what to avoid and play with the settings.

The important thing to realize though is that for this type of content the immediate next step up in quality is very large and well outside the reach of the general public. You would have to construct an off-center rotating rig and master panorama blending software, or get hold of a spherical camera array like the Google Jump-ready GoPro Odyssey (an array of 16 units, to give an idea of the pricing).

A regular off-the-shelf spherical camera can’t produce these images because it lacks the parallax.

File format

The files produced by CardboardCamera have the extension .vr.jpg and can be read by regular JPEG viewers. In that case though, only one eye image can be viewed. The application puts the other eye and the audio content inside the metadata of the JPEG. We knew about left-right and top-bottom layouts for stereoscopic content; this is more like an inside-out layout.

In a nutshell, all the extra content is stored in XMP metadata. XMP supports a special Extended mode that makes it possible to store arbitrarily long attachments.
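
To make the Extended mode a bit more concrete, here is a minimal sketch of how the pieces could be stitched back together. It assumes the layout described in the XMP specification (Part 3): each extended chunk sits in its own APP1 segment and starts with a null-terminated signature, a 32-character GUID, then the total length and the chunk offset as big-endian 32-bit integers. `ExtendedXmpSketch` and `Reassemble` are hypothetical names used for illustration, not part of VrJpeg or MetadataExtractor, and the caller is assumed to have already split the JPEG into APP1 payloads.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

// Sketch only: hypothetical helper, not the VrJpeg or MetadataExtractor API.
public static class ExtendedXmpSketch
{
    // Every extended XMP chunk starts with this null-terminated signature.
    private const string Signature = "http://ns.adobe.com/xmp/extension/\0";
    private const int GuidLength = 32; // ASCII hex digest identifying the extended packet

    private static uint ReadUInt32BigEndian(byte[] data, int offset)
    {
        return ((uint)data[offset] << 24) | ((uint)data[offset + 1] << 16)
             | ((uint)data[offset + 2] << 8) | data[offset + 3];
    }

    private static bool StartsWith(byte[] data, byte[] prefix)
    {
        if (data.Length < prefix.Length) return false;
        for (int i = 0; i < prefix.Length; i++)
            if (data[i] != prefix[i]) return false;
        return true;
    }

    // Copies each chunk to its declared offset in a buffer of the declared total size.
    // Simplification: the GUID is not compared with the one announced in the primary
    // XMP packet, and a single extended packet per file is assumed.
    public static byte[] Reassemble(IEnumerable<byte[]> app1Payloads)
    {
        byte[] signature = Encoding.ASCII.GetBytes(Signature);
        int headerLength = signature.Length + GuidLength + 8;
        byte[] result = null;

        foreach (byte[] payload in app1Payloads)
        {
            // Skip Exif, the primary XMP packet, and anything else that is not a chunk.
            if (payload.Length < headerLength || !StartsWith(payload, signature))
                continue;

            uint fullLength = ReadUInt32BigEndian(payload, signature.Length + GuidLength);
            uint chunkOffset = ReadUInt32BigEndian(payload, signature.Length + GuidLength + 4);
            int chunkLength = payload.Length - headerLength;

            if (result == null)
                result = new byte[fullLength];
            if (chunkOffset + chunkLength > result.Length)
                throw new InvalidDataException("Extended XMP chunk exceeds the declared length.");

            Buffer.BlockCopy(payload, headerLength, result, (int)chunkOffset, chunkLength);
        }

        return result ?? new byte[0];
    }
}
```

Because every chunk carries its own offset, the segments do not need to be visited in file order.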

The metadata in the primary XMP section contains the spherical coordinates and coverage of the eye images. This must be read to render the images correctly in a VR viewer, to export full spheres or to manipulate the images. Captures usually cover the full 360° of longitude and less than 70° of latitude.

The extended XMP section contains the full JPEG file for the other eye and the full MP4 file for the audio, both encoded in Base64.
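
Turning such a Base64 property value back into file bytes is a one-liner in .NET, but `Convert.FromBase64String` rejects input whose length is not a multiple of four. The sketch below is a defensive variant that strips whitespace and restores missing `=` padding before decoding. Whether a given file actually needs this is an assumption here, and `Base64Sketch.DecodeLenient` is a hypothetical helper, not something exposed by VrJpeg.

```csharp
using System;
using System.Linq;

// Sketch only: hypothetical helper, not part of VrJpeg.
public static class Base64Sketch
{
    // Decodes Base64 text, tolerating embedded whitespace and missing '=' padding.
    public static byte[] DecodeLenient(string text)
    {
        // Remove line breaks and spaces that may have been inserted in the payload.
        string compact = new string(text.Where(c => !char.IsWhiteSpace(c)).ToArray());

        // Base64 works on groups of four characters; pad the last group if needed.
        int remainder = compact.Length % 4;
        if (remainder != 0)
            compact = compact.PadRight(compact.Length + (4 - remainder), '=');

        return Convert.FromBase64String(compact);
    }
}
```

A quick sanity check on the decoded image is that it starts with the JPEG start-of-image marker, the bytes `FF D8`.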

While writing this post I realized that Google finally put up a format description online. The reference can be found in Cardboard Camera VR Photo Format. Here are the most important properties:

| XMP Property | Description |
|---|---|
| GPano:FullPanoWidthPixels | The width of what the full sphere would be. |
| GPano:FullPanoHeightPixels | The height of what the full sphere would be. |
| GPano:CroppedAreaLeftPixels | The x-coordinate of the top-left corner of the image within the full sphere. |
| GPano:CroppedAreaTopPixels | The y-coordinate of the top-left corner of the image within the full sphere. |
| GPano:CroppedAreaImageWidthPixels | The width of the image. |
| GPano:CroppedAreaImageHeightPixels | The height of the image. |
| GImage:Mime | Mime type of the embedded image. Ex: “image/jpeg”. |
| GImage:Data | Base64 encoded image. |
| GAudio:Mime | Mime type of the embedded audio. Ex: “audio/mp4”. |
| GAudio:Data | Base64 encoded audio. |
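
As an illustration of how the GPano values relate to the angular coverage quoted in the captions, here is a small sketch. It assumes the eye image is a crop of an equirectangular sphere, so that pixels map linearly to degrees (360° across the full width, 180° across the full height). The class `CroppedPanoSketch` is hypothetical and the numbers in the usage note are made up for the example; they are not read from one of the files in this post.

```csharp
using System;

// Sketch only: hypothetical container mirroring the GPano properties listed above.
public sealed class CroppedPanoSketch
{
    public int FullPanoWidthPixels;
    public int FullPanoHeightPixels;
    public int CroppedAreaLeftPixels;
    public int CroppedAreaTopPixels;
    public int CroppedAreaImageWidthPixels;
    public int CroppedAreaImageHeightPixels;

    // Share of the 360° of longitude covered by the image.
    public double HorizontalCoverageDegrees =>
        360.0 * CroppedAreaImageWidthPixels / FullPanoWidthPixels;

    // Share of the 180° of latitude covered by the image.
    public double VerticalCoverageDegrees =>
        180.0 * CroppedAreaImageHeightPixels / FullPanoHeightPixels;

    // Latitude of the top row of the image, with +90° at the zenith.
    public double TopLatitudeDegrees =>
        90.0 - 180.0 * CroppedAreaTopPixels / FullPanoHeightPixels;

    // Latitude of the bottom row of the image, with -90° at the nadir.
    public double BottomLatitudeDegrees =>
        TopLatitudeDegrees - VerticalCoverageDegrees;
}
```

With a hypothetical 8192 × 4096 sphere and a 8192 × 1502 crop starting at row 1297, the vertical coverage comes out at roughly 66° and the band is centered on the horizon.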

VrJpeg .NET library

The lib was started in late 2015, shortly after Google published the app, to be able to read the files in an omni-stereo viewer for the Rift DK2.

It is a pretty thin layer around MetadataExtractor, a .NET library to read all kinds of metadata in image files, with a few fixes to accommodate Google’s specifics and a few higher level entry points for convenience. I’m no longer updating the viewer for the DK2, but I’m using the library to convert images for consumption in the GearVR.

Following are some code samples showing the typical usage and the abstraction levels.

- High level: create a full equirectangular stereo-panorama with poles filled in.

bool fillPoles = true;

int maxWidth = 8192;

Bitmap stereoPano = VrJpegHelper.CreateStereoEquirectangular(input, EyeImageGeometry.OverUnder, fillPoles, maxWidth);

stereoPano.Save("stereopano.png");

- Mid level abstraction: extract the right-eye from the metadata, then convert it to an equirectangular image.

var xmpDirectories = VrJpegMetadataReader.ReadMetadata(input);

GPanorama pano = new GPanorama(xmpDirectories.ToList());

Bitmap right = VrJpegHelper.ExtractRightEye(pano);

Bitmap rightEquir = VrJpegHelper.Equirectangularize(right, pano);

rightEquir.Save("right-equir.png");

- Low level abstraction: extract right-eye JPEG bytes and audio MP4 bytes directly from the metadata.

var xmpDirectories = VrJpegMetadataReader.ReadMetadata(input);

GPanorama pano = new GPanorama(xmpDirectories.ToList());

File.WriteAllBytes("right-eye.jpg", pano.ImageData);

File.WriteAllBytes("audio.mp4", pano.AudioData);

Pole filling

There is a pole filling argument to the Equirectangularize() function. It attempts to fill the zenith and nadir holes left by the limited vertical coverage of the capture. We don’t have any data for those regions, but filling them with colors roughly reminiscent of the scene is still more pleasing to the eye than the harsh separation and the void.

The strategy is to average pixels taken from the topmost row when filling the zenith and from the bottommost row when filling the nadir. For each pixel, the number of neighbors used in the average is a (non-linear) mapping over the latitude of the target pixel. At the pole itself it averages the entire row, while lower latitudes have tighter neighborhoods to retain local trends. The approach is based on the idea described by Leo Sutic in Filling in the blanks. In my version I use cosine as the mapping function.

This is pretty effective on plain sky zeniths, less so when objects cross the frame boundary.
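
The averaging strategy can be sketched in a few lines. This is not the code from VrJpeg: the exact mapping is an assumption (here the window grows as `1 - cos(t·π/2)`, where `t` goes from 0 at the edge of the captured image to 1 at the pole), the row is reduced to a single channel of `double` values, and `PoleFillSketch` is a hypothetical name.

```csharp
using System;

// Sketch only: a guess at a cosine-based window mapping, not the VrJpeg implementation.
public static class PoleFillSketch
{
    // Fraction of the row used for averaging.
    // t = 0 at the edge of the captured image, t = 1 at the pole.
    public static double WindowFraction(double t)
    {
        t = Math.Max(0.0, Math.Min(1.0, t));
        return 1.0 - Math.Cos(t * Math.PI / 2.0);
    }

    // Builds one filled row from the outermost captured row (topmost for the zenith,
    // bottommost for the nadir). The row wraps around horizontally because the
    // panorama covers 360° of longitude.
    public static double[] FillRow(double[] edgeRow, double t)
    {
        int width = edgeRow.Length;
        int half = (int)Math.Round(WindowFraction(t) * width / 2.0);
        int count = Math.Min(width, 2 * half + 1); // never average a pixel twice

        var filled = new double[width];
        for (int x = 0; x < width; x++)
        {
            int start = x - count / 2;
            double sum = 0.0;
            for (int i = 0; i < count; i++)
            {
                int index = ((start + i) % width + width) % width; // wrap around
                sum += edgeRow[index];
            }
            filled[x] = sum / count;
        }
        return filled;
    }
}
```

At `t = 0` each pixel is copied from the edge row unchanged, and at `t = 1` every pixel gets the average of the whole row. The nested loop is quadratic near the pole; a running sum would be the obvious optimization for full-size panoramas.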

Paarl Rock, Western Cape, South Africa. The captured frame covers 360° × 62.95°.

Equirectangular version with poles filled.

Campsite. The captured frame covers 360° × 65.09°.

Pole filling with objects crossing the frame boundary.

Elsewhere

For some reason there is still a distinct lack of tools published that manipulate the .vr.jpg files or take advantage of the app.

- Split and Join online tool by VectorCult and the accompanying blog post.

- The original announcement blog post at Google VR.

Remarks

The prospect of being able to share stereo-panoramic memories is very appealing. To immerse your family or friends in your vacation pics is pretty cool, and a much better experience than just watching a flat picture. In this regard the ambient audio is a nice addition.

It’s clear that getting the best results takes care with regard to background motion, light levels, rotation radius, and height and tilt variation. And of course not all scenes are amenable to being captured this way; it only works for static content. Objects can’t be too close to the sphere, which is more a limitation of baked-in stereo, and on the other hand, if there is nothing in the near vicinity there is not much point in having the stereo.

There is a lot of experimentation left to do with this app or similar tools. A few ideas: long-term time lapses, merging partials to rebuild the full sphere, a way to perform post-processing effects or composite objects in the foreground, capturing underwater scenes, or capturing with a non-standard methodology like having a very large or small radius for the sphere.

The CardboardCamera will not create the ultimate 6DOF living virtual reality we are waiting for, but to me it is a definite step up from monoscopic spherical images, even those with full coverage. The ease of use of the app for creating that type of content is unmatched. Having this kind of tool casually usable by anyone to capture what they experience is really where things ought to be heading.